Challenge of our generation: reproducible, transparent and reliable science

Symposium summary:

ISBE 2016, 3rd Aug, University of Exeter UK

This post was first published on the Mozilla Science Lab blog

When approached to help organise a post-conference symposium on reproducibility in science at the International Symposium on Behavioural Ecology, I jumped at the opportunity! The symposium was the brainchild of Malika Ihle and Isabel Winney, both post-docs here at the University of Sheffield at the time. As early career scientists, we share feelings of concern and disillusionment by the apparent reproducibility crisis engulfing our profession. At the same time we feel excited and inspired by new tools and practices emerging in response and the vision of what next generation science could (and in our opinion should be): open, transparent, collaborative, web-powered!

The idea behind the symposium was to use the opportunity to take stock of the state of the field with respect to reproducibility, explore potential solutions, hear the experiences of researchers trying to incorporate them into their workflows and provide participants with the opportunity to learn some of the tools and skills discussed. Malika and Isabel had managed to secure a great roster of speakers and we also managed to engage Mike Croucher, EPSRC Research Software Engineering Fellow here at the University of Sheffield, to help put together some of the practical training material.

My own interest in the subject comes from a deep love of working with data and past experiences outside academia. Working as a quality assurance auditor for a contract research organisation operating to OECD good laboratory practices, I got first hand experience of the ubiquity of human error and the lengths required to ensure quality of results. In my next job as a brand coordinator for an extreme sports equipment distributor, I continued to suffer the consequences of errors in data. In the grand scheme of things, it was not the end of the world if the item showing on the system as last in stock and promised to a dealer for next day delivery was in fact out of stock. But it was pretty awkward having to ring them and tell them! So there was strong incentive to check and double check before making promises.

Don’t get me wrong, I love the spirit of discovery afforded by academia. I remember thinking when I first started my PhD:

‘Wow, love the creative freedom in academia!’

soon to be followed, however, by:

‘Hmmm, no one’s really checking anything. Bit sketchy…’

I feel lucky to have developed strong coding skills during my PhD in macroecology and providing what might be classed as research software engineering services as a freelancer since. As such, I recognise that the code and data I produce are my main scientific outputs and have enthusiastically embraced practices required to curate them and help the teams I work with access them. So, I agreed to prepare a couple of workshops on the tools and skills I have found most useful. First thing to do was secure some funding as my freelancing would not cover my time or costs. Luckily, the lovely folks at Mozilla Science Lab stepped-in to support me and also supply some great swag on the day!

…to the symposium

From what I could gather on twitter, the 6-day conference prior to the symposium had been a great success. So Mike and I headed down to join the last day of the 1,500 strong gathering of behavioural ecologists. We were excited to arrive and find great facilities for the workshops and a decent turn-out.

Off to exter with @annakrystalli to spread the word about git, @rstudio and rmarkdown to ecologists.

— Mike Croucher (@walkingrandomly) August 2, 2016

roots of the problem

The day kicked off with some much needed self-reflection, through a series of talks on the many elements contributing to our current reproducibility woes.

self-deception

Shakti Lamba (@lamba_shakti) kicked off with tales of self-deception. She discussed their findings from a recent paper in which they provided the first direct evidence suggesting that self-deception may have evolved to facilitate the deception of others.

Overconfident individuals are overrated by observers and underconfident individuals are judged by observers to be worse than they actually are. Our findings suggest that people may not always reward the more accomplished individual but rather the more self-deceived. Moreover, if overconfident individuals are more likely to be risk-prone then by promoting them we may be creating institutions… more vulnerable to risk.

So,

fooling oneself helps fool others.

and if careers are built on convincing others of the value of one’s work, it’s not hard to see how incentives for overconfidence might emerge.

the trap of false-positives: (p-)hacking our way to publication success

But what does deception of any kind have to do with science? We use statistics, peer review etc. to ensure that the knowledge we create is robust and objective, right? Well, the next few talks exposed the many ways in which our current model of academia is allowing for much deception, of self and others!

Wolfgang Forstmeier explained how the proliferation of a particular kind of deception, type I errors a.k.a. false positive results, can emerge. Firstly, a certain amount of false positives are expected purely by chance. This proportion increases in fields with underpowered studies or highly unlikely hypothesis while the absolute number increases with the number of hypotheses being tested. The situation is exacerbated further by the lower rates of publication of negative or null results. Here’s a great video explaining some of these points:

More importantly, with statistical significance (ie p-value < 0.05) as the holy grail for publication, practices inflating false positives are inadvertently selected for. Such practices involve ‘torturing the data until it confesses’ and can include:

- p-hacking: post-analysis unreported and unsubstantiated decisions on inclusion/exclusion of data/variables/treatment groups/analyses methods.

- over-fitting models, model fishing, and HARKing (generating hypotheses after results are known)

- starting with (or changing to) a less likely hypothesis (since an unlikely hypothesis should be tested with higher power / to a more stringent threshold),

- non-independent data.

Forstmeier: data torture and researcher df massively inflates type 1 error #ISBE_Exeter #openscience @annakrystalli pic.twitter.com/6gjQssnSFc

— Isabel Winney (@IsabelWinney) August 3, 2016

Megan Head delved a bit deeper into the practices and extent of p-hacking, discussing their text mining study of the distribution p-values across the literature. An increase in the relative frequency of p-values just below 0.05 is suggestive of p-hacking and their study found such a pattern to be widespread across many scientific disciplines.

In jest, we all thanked Megan for the tips and tricks on increasing our chances of a positive result and therefore publication. It reminded me of an R-bloggers post introducing the p-hacker app an app designed to ‘help data confess’ by allowing users to ‘practice creative data analysis and polish their p-values’. While the app is obviously a joke, playing around with it really does hammer home how easy it is to craft a significant result.

blinded by not blinding

Luke Holman focused on their examination of another common form of self-deception, observer bias. Such researcher effects…

… are strongest when researchers expect a particular result, are measuring subjective variables, and have an incentive to produce data that confirm predictions.

In their study of the extent of ‘working blind’ to guard against such researcher effects however, they found evidence that blind protocols are uncommon in the life sciences. Even more worrying, non-blind studies tended to report higher effect sizes and more significant p-values.

research workflow domino fail

Finally, quite separate from the statistical issues explored above, lies an even more basic one. As our research becomes more computational, can we even guarantee that we are even answering the questions we think we are? Enter Mike Croucher with his highly entertaining exposition of current research workflows from a research software engineering (RSE) perspective. He explained the reasons we should be increasingly minding the state of our computational workflows which should ideally be set up like dominos and flow nicely from start to finish when set into motion. A solid automated workflow setup is far more robust, efficient and transparent.

The reality of our workflows however are often more like this:

often getting stuck or requiring manual interventions and parameter inputs. This inevitably increases opportunity for error or loss of provenance. More worryingly, it can often mean we are not even asking the questions we think we are, a whole other level of self deception!

To be fair to researchers, this is completely understandable, as we often have limited formal training in techniques or standards. It does however highlight the need for increased computational training and support, particularly through an emerging role for Research Software Engineers. Ultimately, our goal should be to empower researchers with skills to harness and develop them rather than be buried by technological advances.

The main take away from Mike’s talk can be summarised by Croucher’s Law:

PROBLEM: I AM AN IDIOT AND WILL MAKE MISTAKES

and the partial solutions to this problem are:

- Automate (aka learn to program)

- Write code in a (very) high-level language

- Get some training

- Use version control

- Get a code buddy (Maybe an RSE!)

- Share your code and data openly

- Write tests

A predictable predicament

It is becoming clear that the elements of the reproducibility crisis are actually fully predictable given our innate tendencies for bias, and the incentive system we are faced with.

Importantly:

This dynamic requires no conscious strategizing—no deliberate cheating nor loafing—by scientists, only that publication is a principle factor for career advancement. (Smaldino & McElreath, 2016)

Collectively these issues manifest themselves as unreliable science, casting doubt on our paradigms and reducing confidence in our field.

The key to addressing the reproducibility crisis is open and transparent discourse on our research, right from the start. Pre-registration of hypotheses and the analyses proposed to test them can go a long way towards reducing data torture further down the line whilst blinding can help address subjectivity in data collection and decision-making. Additionally, transparency and good documentation of ongoing research will allow us to assess results against what researchers really did rather than what they said (or even think!) they did. It is important to note that in discussions, we all recognised the value of exploratory data analysis, so long as they are clearly labelled as such. Importantly, as Forstmeier noted, we need to stop treating statistical significance as a research goal but rather assess work on robustness, transparency and objectivity.

Admittedly, it’s not easy to move beyond valid considerations of how the cost in terms of time and efforts required by reproducibility will be borne onto the academic. Currently, the costs fall too heavily on researchers, with no formal training on any of the required skills. We need help in the form of RSEs, of library data managers, of a more diverse evaluation frameworks for scientific output and a means to reap recognition for contributions to reproducibility. As individual researchers however, we must start taking some responsibility for the state of the field, and begin to demand some standards. However, this should come hand in-hand with a spirit of more constructive and open scientific discourse. Instead of finger pointing, we should be cultivating an environment where we collectively work to make our outputs more robust.

practical solutions

While we could not address the external elements of a broken incentive structure during the symposium, we really wanted participants to hear inspiring examples from their field from researchers that had begun to integrate reproducibility elements into their workflow. As such, we hoped to demonstrate the intrinsic value of reproducibility, debunk some myths associated with resistance to it and leave participants with some ideas and tools to start experimenting.

unblinded by seeing

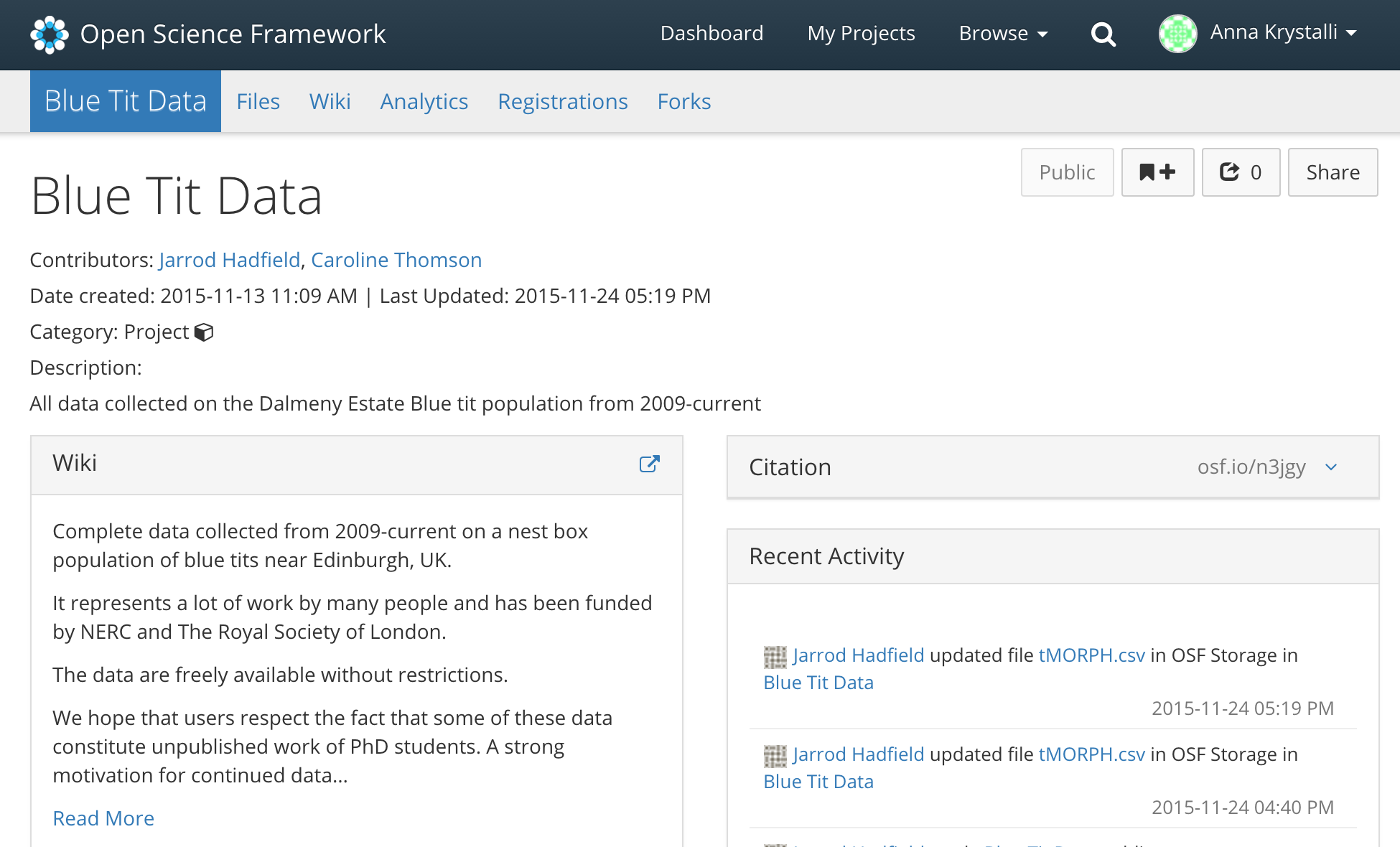

Shinichi Nakagawa gave us a demo of the features of the Open Science Framework (OSF). Created by the Center for Open Science, a non-profit dedicated to supporting researchers in more reproducibility, the OSF is an online portal enabling researchers to centralise and manage project materials throughout project life cycle.

I’d heard of the initiative but admittedly I had not tested it out yet. Inspired by the demo, it was the first thing I tried out by setting up my last two projects. It took me about 5 minutes to gather the materials from the github and googledrive folders that held the data and code for each and and half an hour to set them both up with wikis and couldn’t wait to share it with my collaborators.

open data and the non-end of a postgrad’s world

Next up, Caroline Thomson talked about her experience opening up a long term dataset on blue tits on the OSF, 6 months before the end of here PhD.

While the data are freely available, they encourage a number of practices to both protect their data collection efforts as well as promote reproducibility and openness. For example they ask users to pre-register their intended use of the data to ensure that the data we have worked hard to collect is used to generate robust scientific findings. They also request submission of analysis scripts upon publication to enable validation of earlier results as new data become available.

The most important point of her talk was that, since publication, the dreaded hoard of data vultures, prowling to scoop publication from open research data did not materialise and her planned publication are safe. In fact, the only people using the data so far is her own research group. So was the extra data management effort required to maintain the open data set worth it then? Caroline’s answer was emphatic. The benefits in terms of their own internal reproducibility and re-use far outweighed any data management cost incurred. And while the data may not have instantly flown off the shelves, it has a much higher chance of increased impact by being tidy and open.

the article is the advertising

Martin Bulla described his experiences trying but failing to replicate results in a previously published study. First he doubted himself, but hard as he tried, he could not find the source of the discrepancy in his experimental design or analysis. So he asked for the data and code from the original investigators and, lo and behold, found the bone of contention: a single line of code, omitted from the description of the analysis in the paper violated assumptions. When corrected the effect in the original paper disappeared.

Martin clearly demonstrated the importance of transparency, of data and code versus the paper and made me think of a quote mentioned in a lesson from the Open Science and Reproducible Research course that really stuck with me:

An article about computational science in a scientific publication is not the scholarship itself, it is merely advertising of the scholarship. The actual scholarship is the complete software development environment and the complete set of instructions which generated the figures. Buckheit & Donoho 1995, paraphrasing Jon Claerbout

Controversial? Maybe, but I tend to agree. As Martin’s work aptly demonstrated, papers can and will be refuted as more data become available or errors in analyses uncovered. But the value of well-curated data and code, ready to be re-used or re-evaluated still stands. So why do we spend the majority of our efforts curating the advertisement?

research workflow domino win

Based on materials provided by Susan Johnston (@SuseJohnston), Malika wrapped up this portion of the day by describing the elements of a modern open reproducible science workflow, perfectly encapsulated by this fine video by @ibartomeus

The key themes emerging around is:

PREPARE IT TO SHARE IT!

Benefits include: * significant improvement and refinement of code functionality * errors detection and greater quality control * easier in-house reuse * easier on boarding of new collaborators * greater exposure and impact of work

For me, the most interesting points to emerge from these sessions was that researchers already involved in reproducibility had a different philosophy about their work. They view themselves as public servants, with a duty to produce high quality outputs that are freely accessible. They acknowledge that the needs of science as a whole should not continue to be sacrificed in order to protect the careers of individual scientists (including their own) and are eager to stop competing behind closed doors and start collaborating in the open. This makes me hopeful that adoption of the practices has great potential to contribute from the bottom up to the cultural and eventual institutional change required and really build a new and improved kind of science.

hands-on skills

The afternoon sessions were designed to give attendees a basic starting point from which they could begin to integrate practice into their own workflows. The sessions focused on introducing participants to version control and collaborative coding through GitHub, to literate programming through markdown, and to implementing everything through Rstudio.

We focused on these tools not only because they form the core of private reproducibility but because they also allow for culture change towards more open and collaborative web-powered research. Our overarching goal was to inspire by what was possible! The materials for all three workshops can be found here.

Github through RStudio

The first session aimed to introduce using Github and Rstudio for version control and was run by Mike in a code cafe style, in which participants worked individually through the material with us at hand to help if they get stuck. It aimed to start completely from scratch including all required software installation and by the end, participants had set up their first github repo and linked it to an Rstudio project.

Heads down and working hard at @walkingrandomly RStudio and Git workshop at #ISBE_Exeter #openscience @annakrystalli pic.twitter.com/pjVVvVTmHp

— Isabel Winney (@IsabelWinney) August 3, 2016

Introduction to Rmarkdown

In the Rmarkdown session I wanted to introduce the benefits of literate programming, ie documenting code to be read and understood by humans. But beyond documenting code I wanted to show how versatile these tools are in linking research materials into streams of evidence, producing interactive content and sharing it easily with the world.

Collaborative github through RStudio

In the last practical session of the day, we tackled collaborative use of Github. The task for the session was for participants to fork and clone a repository, create a file supplying parameters defining brownian evolution of a trait for their imaginary species, commit, push and merge to the original repo. Once all species parameters were collated in the original repo, we plotted them all up together!

last thoughts

The most encouraging moment of the whole day came when Sajesh Vijayan and Aparajitha Ramesh, two masters students that had sat through the whole day enthusiastically, approached us at the end and said:

‘That was great! We should be learning this stuff as undergraduates!’

Thank you @IsabelWinney @annakrystalli @walkingrandomly and Malika!!! Had the most amazing day!! Superbly designed symposium!!#ISBE_Exeter

— Sajesh Vijayan (@sajeshksv) August 3, 2016

And I totally agree! I think we completely underestimate what a young generation of scientists, armed with reproducibility skills and set to higher standards from the beginning of their careers, will be able to achieve.

The proceedings and discussions generated by the symposium were published as an ‘Invited Idea’ in the Journal of Behavioural Ecology.